Lawyers Weigh In On Regulating Artificial Intelligence

Intro

Artificial intelligence (AI) is all the talk these days, with its doom and gloom, profits and perils. Everyone uses it (smartphones, Google search, GPS and more). Many are perturbed by it, thinking it is a runaway train. In case you are wondering whether AI will take your job, this site estimates the probability of that scenario: Will Robots Take My Job

Aside: for statisticians, the probability is 22%.

Within the data science community, discourse on algorithm ethics is commonplace, and that should be reassuring. The pace of research and progress is dizzying. Just in the past week, the story broke that OpenAI, a non-profit AI research company, decided against releasing its latest natural language model, GPT-2, due to concerns about its potential misuse. The public debate has been fierce:

- OpenAI built a text generator so good, it’s considered too dangerous to release, Feb 2019

- Ilya Sutskever Twitter thread on resistance to transparency and reproducibility in AI

- Jeremy Howard Twitter thread analysis of the decision and debate

Attorneys are a vital party in the AI discussion. Fordham Law School in New York City held a symposium on February 15, 2019 entitled Artificial Intelligence, Robotics, and the Reprogramming of Law, which was free and open to the public. The event was recorded, and full proceedings will be published in Fall 2019.

Executive Summary

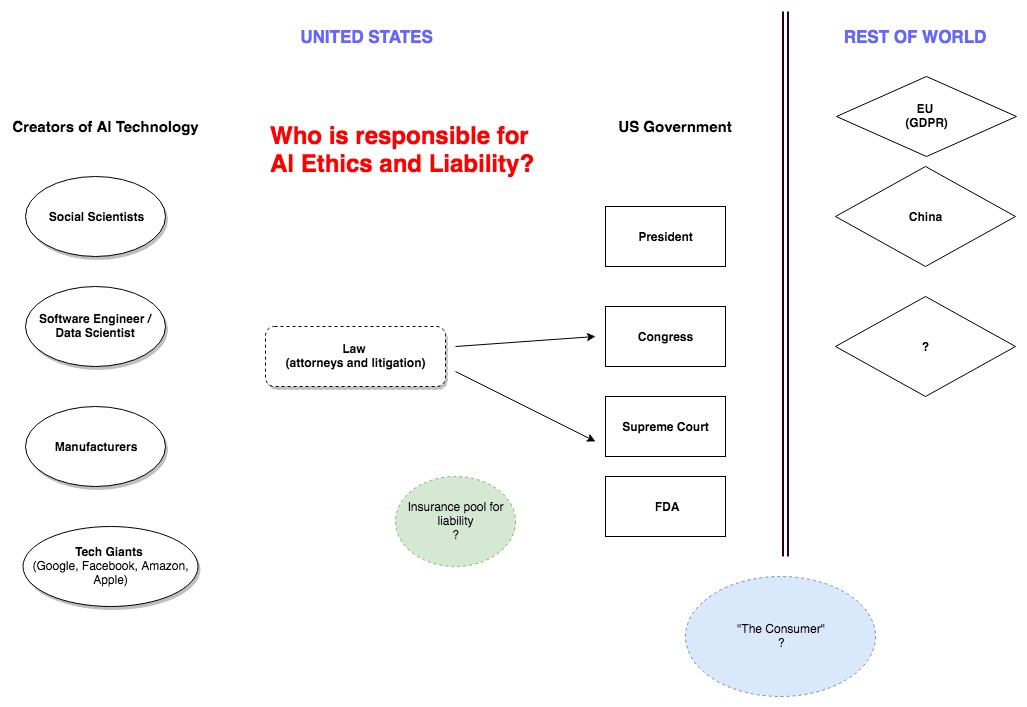

Here are the key takeaways and perspectives from attorneys regarding AI ethics and liability:

Complexity

- Law policy is complex

- AI is changing so rapidly

- Law should resist being overly technical or detailed on policy

- Example: European regulation spans more than 2,000 pages.

- Example: the industry waits for the SCC to decide what blockchain is, and then blockchain developers change their technology to avoid the decision. This is the reality of what is happening

- Determine whether AI is really different. Is there such a thing as “AI Exceptionalism”? Or can we utilize existing laws?

- Use existing laws and expand them for AI

- AI law can draw on privacy, tort and copyright law

- Since people are more biased than AI, the best plan of action is to combine human input with AI

- Interconnectedness: AI ties together many technologies and parties: software, sensors, developers, users and more. Many parties need to be involved in the ethics and liability discussions

Stakeholders

- Attorneys are thinking about it

- The “younger” generation will push policy forward, along with their educators

- The US government needs to invest more in cybersecurity over building a border wall as cybersecurity poses a greater threat to the country

- Admission by attorneys that the law has been slow to respond because many legal jobs can be replaced by AI automation

AI Liability and Compensation

- Not all harm from robots and AI is predictable

- Insurance is one way to deal with damage from AI; establish compulsory insurance pools

- Admission by attorneys that AI policies are likely to be implemented only when the loss of life due to AI occurs

Law Policy Is Complex

Huu Nguyen, a computer scientist turned attorney, states: We as programmers and lawyers are ALL RESPONSIBLE. Let’s figure out how to deal with it.

- AI should be safe

- AI should be ethical (but that varies, in USA and China)

- We need Accountability, Controls and Oversight (that means we need people to oversee AI)

- Law students [are the next generation] and they will deal with what is next.

- Cyber policies exist, but no one is following them. More engagement would be good.

Nguyen shares the example of a coffee maker with AI capability. If the coffee made by this machine is too hot and burns the person, who is liable?

- User, who said “hot”

- Provider of software

- AI-as-a-service companies

- Data provider

- Sensor maker

Options:

- Test it (has its own challenges with any number of possible scenarios, impossible to anticipate and test them all)

- Release the transcript that the AI product learned from (maybe AI has First Amendment rights? How about copyright for the company?)

Dealing with Biases in Machine Learning

Presented by Solon Barocas, Professor of Information Science at Cornell University.

There are numerous examples of biases in algorithms used in machine learning which reinforce negative stereotypes. Here are a few:

- Latanya Sweeney study that found search ads showed repeated incidence of racial bias. (Can computers be racist?)

- Natural Language Processing (NLP) co-occurrence (assuming [man –> doctor], [woman –> nurse] even if that is not the case)

- Accuracy for identifying gender: works less well for women and for dark-skinned people

- Offensive classifications: person in image mistakenly tagged as gorilla

- Language translation: assigning a baseline norm vs outlier; “ethnic food” vs “normal food”
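The accuracy gap in the list above only shows up when results are broken out by group. A minimal Python sketch of that check follows; the function name `accuracy_by_group` and the toy labels are made up for illustration and do not come from the talk.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: fraction of correct predictions} for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        if truth == pred:
            correct[group] += 1
    return {g: correct[g] / total[g] for g in total}


# Made-up toy data: true labels, model predictions, and group membership.
y_true = ["f", "f", "f", "f", "m", "m", "m", "m"]
y_pred = ["f", "m", "m", "f", "m", "m", "m", "f"]
groups = ["women"] * 4 + ["men"] * 4

rates = accuracy_by_group(y_true, y_pred, groups)
print(rates)
```

A single overall accuracy number (5 of 8 correct here) would hide the fact that the toy classifier does worse on one group than the other.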

These are possible prescriptions to address the biases:

- Do nothing

- Improve accuracy (add more data, better learning)

- Blacklist (exclude problematic tags)

- Scrub to neutral (break problematic associations)

- Representativeness (create a statistically representative distribution for training data)

- Equal representation (create equal representation of all groups)
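The last two prescriptions differ in what the training data is resampled toward. The Python sketch below is one possible reading, not a method presented at the symposium: `equalize_groups` downsamples every group to the size of the smallest one, while `resample_to_shares` draws with replacement until each group matches a target population share. Both function names, the `group` field, and the 60/40 shares are assumptions chosen for illustration.

```python
import random
from collections import Counter, defaultdict


def _split_by_group(records, group_key):
    """Bucket records by the value stored under group_key."""
    by_group = defaultdict(list)
    for rec in records:
        by_group[rec[group_key]].append(rec)
    return by_group


def equalize_groups(records, group_key, seed=0):
    """Equal representation: downsample each group to the smallest group's size."""
    rng = random.Random(seed)
    by_group = _split_by_group(records, group_key)
    target = min(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(rng.sample(members, target))
    return balanced


def resample_to_shares(records, group_key, shares, size, seed=0):
    """Representativeness: sample with replacement to match target shares."""
    rng = random.Random(seed)
    by_group = _split_by_group(records, group_key)
    sample = []
    for group, share in shares.items():
        sample.extend(rng.choices(by_group[group], k=round(share * size)))
    return sample


# Made-up training set: group "a" is heavily over-represented.
records = [{"id": i, "group": "a"} for i in range(8)]
records += [{"id": i, "group": "b"} for i in range(8, 10)]

balanced = equalize_groups(records, "group")
representative = resample_to_shares(records, "group", {"a": 0.6, "b": 0.4}, size=10)

print(Counter(r["group"] for r in balanced))
print(Counter(r["group"] for r in representative))
```

Downsampling throws data away, and sampling with replacement repeats the few records a small group has, so neither option adds information about the under-represented group.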

Barocas made two insightful points:

- Anti-discrimination laws in the US have generally been ineffective.

- If a group of people is being excluded, ask a further question: why aren’t people in this group included? Then examine those causes.

Transparency, Accountability and Governance

Presented by Edward Felten, Professor of Computer Science and Public Affairs at Princeton University

There are three possible approaches to developing safety-critical systems:

- Transparency

    - Publish the code and let experts study it.

    - Studying code in this way is known as “static analysis” and has its practical limitations

- Provide hands-on system access to auditors

    - Give auditors access to the system

    - Pros: can be powerful for some purposes

    - Cons: may not test all scenarios, and it is weak for checking the “fairness” property; it is limited and inadequate

- Due diligence on the engineering process

    - Consider how the system was designed and the methods used

    - Look at the process and what it leads to

    - Cons: it is an immature discipline

Felten says all of the proposed approaches above are weak. He suggests designing a system that is analyzable and governable.
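To make the hands-on auditing approach concrete, here is a minimal Python sketch of a black-box paired test. It is not from Felten's talk: the auditor can only call the system and compare outcomes for inputs that differ in one attribute. `paired_audit`, `toy_decide`, and the score thresholds are hypothetical stand-ins.

```python
def paired_audit(decide, applicants, attribute, values):
    """Flag applicants whose decision changes when only `attribute` changes."""
    flagged = []
    for applicant in applicants:
        # Query the opaque system once per attribute value, all else equal.
        outcomes = {v: decide({**applicant, attribute: v}) for v in values}
        if len(set(outcomes.values())) > 1:
            flagged.append((applicant, outcomes))
    return flagged


def toy_decide(applicant):
    """Hypothetical system under audit; the auditor cannot see this logic."""
    threshold = 600 if applicant["group"] == "a" else 650
    return applicant["score"] >= threshold


applicants = [
    {"score": 620, "group": "a"},
    {"score": 700, "group": "b"},
    {"score": 500, "group": "a"},
]

flagged = paired_audit(toy_decide, applicants, "group", ["a", "b"])
for applicant, outcomes in flagged:
    print(applicant, outcomes)
```

Only the applicant with a score between the two hidden thresholds is flagged. An audit set without such a case would report nothing, which is the limitation noted above: hands-on access cannot cover every scenario.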

Privacy Paradox

Presented by Ari Ezra Waldman

Waldman is both a law professor and sociologist. He presents the following paradox:

- If we think that algorithm decisions are legitimate, we will continue to let those algorithms be used.

- But, what makes us feel that these decisions are legitimate?

- Once humans are removed from the decision-making process, the perceived legitimacy of algorithms drops. And yet, people generally feel more comfortable with algorithms.

Consumers are not well educated or informed on AI, and this contributes to the lack of policy advancement.

Summary

The AI ecosystem is complex. Therefore, methods of addressing AI harm and liability are also complicated. Cross-border policies need to be considered because the scope of AI is world-wide. In summary, everyone is responsible for AI technology. It is not clear when regulation will be developed. The expectation is that significant changes in AI policy will be developed when loss of life occurs.

Definitions / Laws

A cyber security policy (which may also cover a business’ liability for a data breach in which the firm’s customers’ personal information, such as Social Security or credit card numbers, is exposed or stolen by a hacker or other criminal who has gained access to the firm’s electronic network) outlines the assets you need to protect, the threats to those assets and the rules and controls for protecting them and your business. The policy should inform your employees and approved users of their responsibilities to protect the technology and information assets of your business. ref

A tort is an act or omission that gives rise to injury or harm to another and amounts to a civil wrong for which courts impose liability. In the context of torts, “injury” describes the invasion of any legal right, whereas “harm” describes a loss or detriment in fact that an individual suffers. ref

The Privacy Paradox

The privacy paradox – Investigating discrepancies between expressed privacy concerns and actual online behavior – A systematic literature review ref

Work Made For Hire (WMFH)

“Work made for hire” is a doctrine created by U.S. Copyright Law. Generally, the person who creates a work is considered its “author” and the automatic owner of copyright in that work. However, under the work made for hire doctrine, your employer or the company that has commissioned your work, not you, is considered the author and automatic copyright owner of your work. ref

EU General Data Protection Regulation (GDPR)

A new set of rules designed to give EU citizens more control over their personal data ref

Biometric Information Privacy Law

The Biometric Information Privacy Act (BIPA) was passed by the Illinois General Assembly on October 3, 2008. BIPA guards against the unlawful collection and storing of biometric information ref

Gramm-Leach-Bliley Act (GLBA)

Protects the personal data of individuals ref

Unfair, Deceptive or Abusive Acts and Practices (UDAP)

Prohibits “unfair or deceptive acts or practices in or affecting commerce.” ref

References

- EU expert group wants your say on draft AI ethics guidelines, Dec 2018

- U.S. Senator Schatz Introduces Bill to Protect Consumers’ Information Online, Dec 2018

- Edward Felten (Princeton University) talk at NeurIPS 2018, Dec 2018

- Lael Brainard (Federal Reserve Board of Governors): What Are We Learning about Artificial Intelligence in Financial Services?, Nov 2018

- Weapons of Math Destruction podcast, Nov 2018

- New York City’s Bold, Flawed Attempt to Make Algorithms Accountable, Dec 2017

- Computer paints ‘new Rembrandt’ after old works analysis, Apr 2016

- Facebook and YouTube should have learned from Microsoft’s racist chatbot, Mar 2018

- Algorithms & Accountability Conference: NYU School of Law, Feb 2015
